Systems Engineer

The Next Generation

In September, IBM announced the 10th generation of the IBM Storage DS8000. As of this writing, these enterprise-class storage systems are officially shipping; in fact, I have 3 systems arriving at a client site tomorrow! This article takes a deeper dive into the innovations in these storage systems built for IBM Z and IBM i architectures.

System Classes

Just as in the previous few generations of the DS8000, the Gen 10 will have multiple classes of systems to meet different requirements. Currently, there are two versions available:

- DS8A50

This will be the workhorse of the Gen 10 systems. If you’re familiar with the DS8900 family, this is, unsurprisingly, the equivalent of the DS8950. Unlike the DS8950, however, the 8A50 comes standard with 20 cores per processor node. It can be configured with up to 3,500 GiB (over 3 TiB) of cache, with write cache reaching a maximum of 192 GB in those configurations.

As with the DS8950, it is also possible to add an expansion frame to the 8A50. However, you may find that with this newer generation a single frame meets your capacity needs, particularly if you take advantage of compression.

In terms of I/O ports, the I/O bays hold up to 128 host ports, depending on your requirements. The host adapter cards are the same ones found in the existing DS8950s (4-port, 32 Gbps), and the system can use up to 10 ports for zHyperLink (zHL).

- DS8A10

For environments or workloads that don’t require the same amount of power as the A50, the DS8A10 is an effective option. The DS8A10 is the equivalent of the DS8910 model 994, which means that it comes with its own rack, but you can expect a rackless Gen 10 version sometime in 2025.

As with the DS8910, this model is more limited in power. However, it has a bit more than its predecessor, coming in at 10 cores per node (20 cores total), though it is still limited to 512 GiB of cache, with up to 32 GB of write cache. Also as with the DS8910, it has no option for an expansion frame, and it supports a maximum of 64 host ports (using the same 4-port, 32 Gbps adapters) and up to 4 zHL ports.
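To make the two models easier to compare, the limits quoted above can be captured in a small data structure. This Python sketch is illustrative only: the `ModelSpec` class and its field names are hypothetical, the two-node assumption is inferred from the “10 cores per node (20 cores total)” figure for the A10, and the adapter count is simple arithmetic on 4-port cards, not a statement about how many adapter slots either model physically has.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """Quoted maximums for one DS8000 Gen 10 model (hypothetical helper)."""
    name: str
    cores_per_node: int
    max_cache_gib: int
    max_write_cache_gb: int
    max_host_ports: int
    max_zhl_ports: int
    nodes: int = 2              # assumption: two processor nodes, inferred from "20 cores total" on the A10
    ports_per_adapter: int = 4  # 4-port 32 Gbps host adapter cards

    @property
    def total_cores(self) -> int:
        return self.cores_per_node * self.nodes

    @property
    def max_cache_tib(self) -> float:
        # 1 TiB = 1024 GiB
        return self.max_cache_gib / 1024

    @property
    def adapters_for_max_ports(self) -> int:
        # Cards needed to reach the maximum port count (arithmetic only, not a slot count)
        return math.ceil(self.max_host_ports / self.ports_per_adapter)


DS8A50 = ModelSpec("DS8A50", cores_per_node=20, max_cache_gib=3500,
                   max_write_cache_gb=192, max_host_ports=128, max_zhl_ports=10)
DS8A10 = ModelSpec("DS8A10", cores_per_node=10, max_cache_gib=512,
                   max_write_cache_gb=32, max_host_ports=64, max_zhl_ports=4)

for model in (DS8A50, DS8A10):
    print(f"{model.name}: {model.total_cores} cores, "
          f"{model.max_cache_tib:.2f} TiB cache, "
          f"{model.max_write_cache_gb} GB write cache, "
          f"{model.adapters_for_max_ports} adapters for {model.max_host_ports} host ports, "
          f"{model.max_zhl_ports} zHL ports")
```

The derived values make the gap between the models concrete: 3,500 GiB works out to roughly 3.42 TiB, and reaching 128 ports on 4-port cards takes twice as many adapters as reaching 64.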

Other models

As mentioned above, you can likely expect the launch of a rackless version of the A10 in 2025. You can also expect an “analytics class” of this system in the same vein as the DS8980, providing more processing cores and cache for the most demanding workloads.

Why Power 9+ In Gen 10?

If you look through the technical details of the Gen 10, you will discover that it uses Power 9+ processors instead of Power 10. You may wonder why IBM chose Power 9+ technology instead of its most recent and powerful processor, the Power 10.

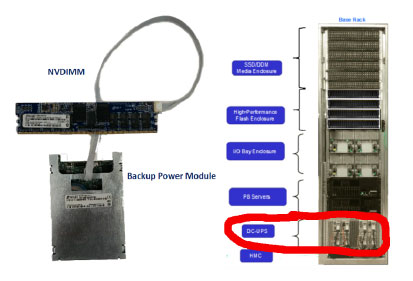

Figure 1: An NVDIMM with Backup Power Module compared to a DS8886 with integrated DC-UPSes

The main reason is NVDIMM support. Power 9+ processors use NVDIMMs, which enable data to be saved to flash during a power loss and immediately restored at power up. Compared with the integrated DC-UPSes of earlier systems such as the DS8886 (Figure 1), this takes a considerable amount of weight out of the configuration, and it allows the data to be encrypted as it is written to flash.

New HMCs

The Hardware Management Console (HMC) in the DS8000 has always been a custom configuration based on x86 architecture. This has been functional, but as functions such as IBM Copy Services Manager (CSM) have been added to the system, the HMCs have been put under increasing strain. With the Gen 10, the HMCs move to IBM Power 9 hardware (Figure 2).

Figure 2: DS8000 Gen 10 Power 9 HMCs

The HMCs also continue to have CSM installed on them, as they have since the DS8880 systems, but now CSM is also fully licensed. The one caveat is that the included license covers only replication between Gen 10 systems; if you are replicating to a DS8900, for example, you will still need an additional license. But if you are using CSM for Safeguarded Copy, you no longer need to manage it separately.

Change to RAID

Since their introduction, IBM DS8000 systems have used a custom ASIC for their RAID controller. While that design is powerful and reliable, advances in technology have made software-based RAID a better option, one that has already been implemented on other platforms in the IBM portfolio (such as IBM FlashSystems with their Distributed RAID).

With the Gen 10, the IBM DS8000 follows this change. This should make future development efforts more streamlined, as ASIC design and verification can be time-consuming and costly.

What this doesn’t do is change some of the fundamentals of how RAID is constructed on the DS8000. For example, install groups will continue to be 16 drives each, and will be split into 2 RAID groups of 8 drives.

Typically, you will continue to see RAID 6 used for the Gen 10, with rare instances where RAID 10 might be required. RAID 5 is no longer supported with the new RAID code, but research across the industry has shown that RAID 5’s single parity carries too much risk compared with RAID 6’s double parity, especially with drives of the size used in these systems.
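Because each 16-drive install group is split into two 8-drive RAID groups, the usable share of raw capacity follows directly from the RAID level. The sketch below is a back-of-the-envelope calculation that assumes no spares and no formatting or metadata overhead; real DS8000 arrays may give up drives to sparing, so StorM remains the tool for actual configurations. The function names and the 4.8 TB example drive are illustrative.

```python
def usable_fraction(raid_level: str, drives: int = 8) -> float:
    """Share of raw capacity left for data in one RAID group.

    Simplifying assumption: ignores spares and formatting/metadata overhead.
    """
    if raid_level == "RAID 6":
        # Two drives' worth of capacity hold the double parity (P and Q)
        return (drives - 2) / drives
    if raid_level == "RAID 10":
        # Every block is mirrored, so half of the drives hold unique data
        return (drives // 2) / drives
    # RAID 5 is no longer offered with the Gen 10 RAID code
    raise ValueError(f"unsupported RAID level: {raid_level}")


def install_group_usable_tb(drive_tb: float, raid_level: str,
                            drives_per_group: int = 16, raid_groups: int = 2) -> float:
    """Approximate usable TB for one install group of 16 drives split into 2 RAID groups of 8."""
    drives_per_raid_group = drives_per_group // raid_groups
    return (raid_groups * drives_per_raid_group * drive_tb
            * usable_fraction(raid_level, drives_per_raid_group))


for level in ("RAID 6", "RAID 10"):
    print(f"{level}: {install_group_usable_tb(4.8, level):.1f} TB usable from 16 x 4.8 TB drives")
```

Under these assumptions RAID 6 keeps three quarters of raw capacity for data while RAID 10 keeps half, which is one reason RAID 10 is reserved for the rare cases that need it.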

The Future of Easy Tier

Easy Tier has always been a great feature of the DS8000 and it will continue to be integral to the design of the system. However, over the past several years IBM has learned quite a bit about how it can best be used in a world that is entirely Flash-based.

When Easy Tier was first introduced, Flash was the exception in a world filled with spinning drives. Flash was expensive, so it made sense to buy a small amount of it and let the most heavily accessed data move to that tier while other data stayed on enterprise or NL-SAS drives.

But the storage world has evolved, and IBM has seen little to no appreciable user benefit from moving extents between different tiers of Flash. At best, it provides a very small performance benefit; at worst, it can leave the DS8000 needlessly using resources to move extents around for no noticeable benefit.

The recommendation to avoid tiering between Flash drive types isn’t new; it has been part of the IBM Storage Modeller (StorM) tool for some time. But with the Gen 10s, you will no longer be using Easy Tier to move data between drive tiers. So you’ll want to determine up front which drive tier matches your performance and capacity needs and stick with it. Additional tiers or types of drives will need to be kept in separate extent pools.
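If you plan extent pools in a spreadsheet or script, a simple check can flag any pool that mixes drive types, in line with the guidance that additional tiers belong in separate extent pools. This is a hypothetical planning helper, not a DS8000 or DSCLI interface; the pool names and drive-type labels are made up for illustration.

```python
from collections import defaultdict


def mixed_tier_pools(drives: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return pools that contain more than one drive type.

    `drives` is a list of (pool_name, drive_type) pairs from a hypothetical
    capacity plan; this is a planning aid, not a DS8000 interface.
    """
    types_by_pool: dict[str, set[str]] = defaultdict(set)
    for pool, drive_type in drives:
        types_by_pool[pool].add(drive_type)
    # Any pool with two or more drive types would need to be split into separate extent pools
    return {pool: types for pool, types in types_by_pool.items() if len(types) > 1}


plan = [
    ("pool_0", "FCM"),
    ("pool_0", "FCM"),
    ("pool_1", "FCM"),
    ("pool_1", "industry-standard flash"),
]
for pool, types in mixed_tier_pools(plan).items():
    print(f"{pool} mixes {sorted(types)}; put each drive type in its own extent pool")
```

Easy Tier will still rebalance extents within each single-type pool; the check only guards against designing a pool that expects tiering.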

What Easy Tier will continue to do is rebalance the extents. This will allow the system to optimally perform as you add capacity and as data is written and deleted.

What about the FlashCore Modules?

The ability of the Gen 10 systems to use 4th Generation IBM FlashCore Modules (FCMs) is one of the most eagerly anticipated features of the new system. So, it’s worth looking at what can be expected from this in a fair amount of detail.

- What are FlashCore Modules?

FCMs are an IBM-designed and IBM-manufactured form of Flash drive. In the current generation, they are based on a unique combination of Single Level Cell (SLC) and Quad Level Cell (QLC) Flash technology. They stem from IBM’s acquisition of Texas Memory Systems back in 2012. Core parts of the technology have been used in multiple generations of IBM FlashSystems storage, starting with the “MicroLatency Modules” used in the FlashSystem 900 and A9000s, continuing into the NVMe-based Storwize V7000 Gen 3s with the first generation of FCMs, and developing further in the current IBM FlashSystems. So this hardware has been field-tested and has proven exceptionally reliable.

The key feature of FCMs is that they perform encryption and compression in hardware. Yes, you read that correctly: FCMs compress everything written to them in hardware, without requiring any additional processing in software. This means there’s no performance penalty for what can be a very costly process when performed in software.

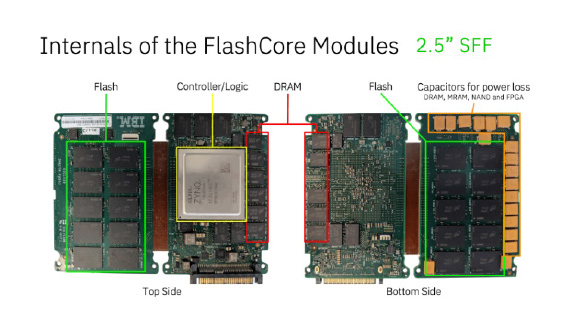

FCMs can do this because of the additional computational power that they have built into their hardware. As you can see in the diagram, the actual Flash component of the drive is only one relatively small part of the drive. The FCMs also have their own compute hardware that enables compression and opens the door to other capabilities as well.

Figure 3 FCM4 layout and components

For instance, one feature (not currently available on the DS8000) that was recently introduced into the FCM with the release of the FCM4 is ransomware detection. In combination with IBM Storage Insights, the FCM4s are capable of detecting, at the block level, whether writes are being made in a suspicious manner. This can allow the IBM Storage systems to take action to protect the data when something, such as malware, could be compromising the integrity of the data. It could, as one example, automatically take a Safeguarded Copy.

- Changes to storage management with FCMs

By nature, pools built with FCMs use Extent Space Efficient (ESE) volumes, so everything you build in those pools will be thin provisioned: the capacity you allocate to a volume is physically provided only as it is needed.

This means that you can overprovision your storage, provisioning more capacity in ESE volumes than physically exists on the system. So you need to make sure that you understand how much actual capacity you require. It also means that capacity allocated to volumes but not yet used remains available as free capacity in the pool, which increases your savings over and above what you realize from compression.

Finally, you need to build space release into your procedures. If capacity is no longer being used because you’ve deleted a volume, you want to make sure that the capacity is released back into the pool.
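The capacity arithmetic behind thin provisioning is worth keeping in front of you. The sketch below assumes a single, uniform compression savings rate across the pool, which real data will not have, and uses hypothetical field names and example numbers; it is not how the DS8000 itself reports capacity.

```python
from dataclasses import dataclass


@dataclass
class PoolCapacity:
    """Capacity view of one FCM extent pool (hypothetical fields, TiB)."""
    physical_tib: float          # usable physical capacity in the pool
    provisioned_tib: float       # total size of the ESE volumes presented to hosts
    written_tib: float           # host data actually allocated, before compression
    compression_savings: float   # assumed uniform fraction saved, e.g. 0.5 for 2:1

    def overprovisioning_ratio(self) -> float:
        return self.provisioned_tib / self.physical_tib

    def physical_used_tib(self) -> float:
        return self.written_tib * (1 - self.compression_savings)

    def physical_free_tib(self) -> float:
        return self.physical_tib - self.physical_used_tib()

    def survives_full_allocation(self) -> bool:
        # Could the pool hold every volume filled completely at the same savings rate?
        return self.provisioned_tib * (1 - self.compression_savings) <= self.physical_tib


pool = PoolCapacity(physical_tib=100.0, provisioned_tib=250.0,
                    written_tib=120.0, compression_savings=0.5)
print(f"overprovisioned {pool.overprovisioning_ratio():.1f}:1, "
      f"{pool.physical_free_tib():.1f} TiB physically free, "
      f"safe if every volume fills: {pool.survives_full_allocation()}")
```

With these example values the pool is overprovisioned 2.5:1 and has 40 TiB physically free, but it could not absorb every volume filling completely. Releasing space for deleted data lowers the written figure and, with it, the physical capacity in use.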

Compression – What can I expect?

Compression is one of those things where the answer is always “It depends”.

The first question to ask is whether your data is already being compressed or encrypted in the host or application. This is key because data that is already compressed will not compress much further. Encrypted data is effectively indistinguishable from random 1s and 0s, so you will get no compression from it.
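The effect of pre-compressed or encrypted data is easy to demonstrate. This Python sketch uses the standard `zlib` module (DEFLATE) purely as a stand-in: it is not the algorithm the FCMs use, so the percentages only illustrate the principle. Random bytes from `os.urandom` stand in for encrypted or already-compressed data, and the record text is made up.

```python
import os
import zlib


def savings(data: bytes) -> float:
    """Fraction of space saved by zlib (DEFLATE) compression; <= 0 means no benefit."""
    return 1 - len(zlib.compress(data, 6)) / len(data)


# Repetitive, record-like text compresses well
text_like = b"CUSTOMER-RECORD ACCOUNT=000123 STATUS=ACTIVE REGION=NORTH\n" * 2000
# Random bytes stand in for encrypted or already-compressed data
random_like = os.urandom(len(text_like))

print(f"repetitive records: {savings(text_like):.1%} saved")
print(f"random bytes:       {savings(random_like):.1%} saved")
```

The random input typically comes out slightly larger than it went in, which is the same reason host-encrypted data yields no savings on any compressing storage.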

When you get past this question, though, there’s still more work to be done.

- IBM Z mainframe

On IBM Z, changes have been made to DFSMS to support compression sizing. If you apply APAR OA66417, you will have access to the Compression Tool. If you’ve used compression on FlashSystems in the past, you may know the same concept as “Compresstimator”.

Running the tool against the volumes that you want to evaluate will provide output indicating how much you will save from thin provisioning and compression.

- Linux and Windows

For traditional open systems (Linux/Windows), you can take advantage of the IBM “Compresstimator” tool. Once installed, it scans the volumes that the host can see and provides capacity savings details for compression and thin provisioning.

- Other platforms

For other platforms, your best bet is to take a representative sample of your data and use an external compression tool.

For example, on IBM i, I recently worked with a customer who saved their main database using the SAVLIB command. They did this with the most conservative compression setting and found that they achieved a 53% compression ratio. Subject Matter Experts from IBM on both the Storage and IBM i sides confirmed that this ratio would be representative of what they could expect.

One important thing to remember, though, is that not all data compresses well. So, in the example above, I separated out the portion that was used for image data (which would already be in a compressed format) so that it would not be considered in the 53% data reduction.
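Excluding data that won’t compress, as in the image-data example above, amounts to a capacity-weighted average. The sketch below treats the 53% figure as the fraction of space saved and uses a hypothetical 40 TB database and 10 TB of image data; substitute your own measured numbers.

```python
def blended_savings(segments: list[tuple[float, float]]) -> float:
    """Overall fraction of space saved across data segments.

    Each segment is (capacity_tb, savings_fraction); already-compressed data such
    as images should be entered with a savings fraction of 0.
    """
    total_tb = sum(capacity for capacity, _ in segments)
    stored_tb = sum(capacity * (1 - frac) for capacity, frac in segments)
    return 1 - stored_tb / total_tb


# Hypothetical estate: 40 TB of database at the measured 53% savings, 10 TB of image data at 0%
estate = [(40.0, 0.53), (10.0, 0.0)]
print(f"database alone: 53.0% saved; whole estate: {blended_savings(estate):.1%} saved")
```

With those assumed sizes, overall savings drop to about 42.4%, noticeably below the 53% measured on the database alone, which is why the incompressible portion needs to be sized separately.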

Capacity in the Gen 10 systems

You might find that you don’t need as big a system with Gen 10, even if you are not using FCM4s. That is to say, your capacity needs may be the same as or even larger than before, but where you previously needed an expansion frame, you may now have a single-frame system with empty space for additional drives.

The new High Performance Flash Enclosures (HPFE Gen 3) are built on PCIe Gen 4, which gives them substantially more throughput capability than previous generations. What I’ve seen in performance modeling is that large drives are worth considering if they fit your capacity needs. In the configurations I’ve had modeled, I have not seen a performance benefit from choosing 4.8 TB FCMs over 19.2 TB FCMs, or vice versa. The only real difference I’ve seen is the size of each capacity increment: adding a set of 16 19.2 TB FCMs adds a very large amount of capacity even before compression, depending on whether spares are needed in a new enclosure pair.
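The granularity point is simple arithmetic. The sketch below assumes sets of 16 drives (matching the install groups described in the RAID section), the recommendation of 2 drive sets per enclosure pair given in the summary, and a hypothetical 600 TB raw target; it ignores RAID, spares, and compression, so it only shows the size of each step.

```python
import math

DRIVES_PER_SET = 16           # assumption: drives are added in sets of 16
SETS_PER_ENCLOSURE_PAIR = 2   # assumption: the recommended 2 drive sets per enclosure pair


def raw_increment_tb(drive_tb: float) -> float:
    """Raw capacity added by one 16-drive set, before RAID, spares, and compression."""
    return DRIVES_PER_SET * drive_tb


def plan(target_raw_tb: float, drive_tb: float) -> tuple[int, int]:
    """Return (drive sets, enclosure pairs) needed to reach at least target_raw_tb raw."""
    sets = math.ceil(target_raw_tb / raw_increment_tb(drive_tb))
    pairs = math.ceil(sets / SETS_PER_ENCLOSURE_PAIR)
    return sets, pairs


TARGET_RAW_TB = 600  # hypothetical target
for size in (4.8, 9.6, 19.2):
    sets, pairs = plan(TARGET_RAW_TB, size)
    print(f"{size:>5} TB FCM: {raw_increment_tb(size):6.1f} TB per set, "
          f"{sets} set(s) in {pairs} enclosure pair(s) for {TARGET_RAW_TB} TB raw")
```

For the 600 TB example, 4.8 TB FCMs need 8 sets across 4 enclosure pairs while 19.2 TB FCMs need only 2 sets in a single pair, but each further 19.2 TB set then adds more than 300 TB raw at once.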

The additional bandwidth will also allow better use of Safeguarded Copy, because there is a much larger pipe on the back end of the system. So, as your cyber resiliency needs increase, you’ll be able to take more backups more frequently.

Summary

Obviously, this is a lot of information. To boil it down, I offer the following:

- The new systems are very much worth your consideration.

- Consider the benefits of compression, but make sure that you size around what you can reasonably expect from it. For example, if you know that you’re storing image files, take that into account, since they won’t provide additional compression benefit.

- Use FCMs if you can, both for compression now and for future capabilities like malware detection.

- Pick the right size of drive to start. You’re not likely to see any performance benefit from using the “fastest” of the industry-standard drives, so it really comes down to how much capacity you want to add to the system.

- The gating factor on performance is the number of enclosure pairs, with a recommendation of 2 drive sets per enclosure pair, and the enclosures have so much bandwidth that they are challenging to push.

For example, I was asked to size configurations with 19.2 TB, 9.6 TB, and 4.8 TB FCMs to see whether there was any benefit to spreading the workload across more drives. In the samples I modeled, I didn’t see any performance benefit, only finer granularity when adding capacity.

Finally, be aware that, in keeping with the tradition of previous DS8000 generations, the initial code level shipping with these new systems (10.0) is designed for stability. This means that it doesn’t have the full functionality of the latest release of DS8900 code. When 10.1 is released in 2025, it will reach feature parity with 9.4 and begin adding new features.

Enterprise Storage and Data Solutions

Mainline offers cyber storage solutions that increase workload efficiency of today’s data intensive enterprises. As a Platinum level IBM Business Partner, the highest partnership level in the IBM PartnerWorld program, and having deep knowledge across the entire spectrum of enterprise storage solutions, we help our clients select and acquire the best offerings to suit their business needs and budget.

Our experts can assist you from the initial assessment through post-implementation support, including services that can help you maximize and sustain business efficiencies. Contact your Mainline Account Representative directly or reach out to us with any questions.
